Site indexing

Is a search engine a database? That is the first question to answer. If it is, the next question is how it collects your data and your content. Simply listing website addresses (domain names) isn't sufficient these days, because search engines assign certain attributes to each domain name.

The list of domain names is long, and the content behind each one is different. Search engine algorithms may distinguish content by:

- type: text, image, video

- structure: XML, etc.

- qualities of the content: popularity, etc.

- relevance

- reference to the search query, etc.
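To make the list above concrete, here is a minimal sketch of an inverted index that stores a type and a popularity score next to each page. The `Page` fields, the example addresses and the ranking rule are all assumptions made for illustration, not how any real search engine works.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Page:
    url: str           # hypothetical address
    kind: str          # "text", "image" or "video"
    popularity: float  # assumed score between 0 and 1
    body: str


def build_index(pages):
    """Map each lowercase word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for page in pages:
        for word in page.body.lower().split():
            index[word].add(page.url)
    return index


def search(query, pages, index, kind=None):
    """Rank pages by number of matched query words, then by popularity."""
    by_url = {p.url: p for p in pages}
    scores = defaultdict(int)
    for word in query.lower().split():
        for url in index.get(word, ()):
            scores[url] += 1
    hits = [by_url[u] for u in scores if kind is None or by_url[u].kind == kind]
    hits.sort(key=lambda p: (scores[p.url], p.popularity), reverse=True)
    return [p.url for p in hits]


PAGES = [
    Page("a.example/post", "text", 0.9, "site indexing for search engines"),
    Page("b.example/clip", "video", 0.4, "search engines explained"),
    Page("c.example/note", "text", 0.2, "content writing tips"),
]
INDEX = build_index(PAGES)
```

Calling `search("search engines", PAGES, INDEX, kind="video")` keeps only the video page, which shows how a stored attribute narrows the result beyond the plain list of addresses.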

Cognitive search

As the human mind improves at content creation, the technical side of it grows more complicated. Search engine management requires more scripting: by attributes, relevance, PR, artificial intelligence predictions, etc. Such developments are implemented mostly by big companies such as Google, IBM and Microsoft (it is no secret why). Microsoft, for example, offers Azure Cognitive Search as an advanced type of content aggregation.

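Such an engine applies a cognitive skillset to the content it indexes: a set of AI enrichment steps that extract text, entities and key phrases from the data you consume or create. In effect, it learns the character of the material and of the person who surfs the Internet. Other Azure features, such as synonym maps, widen a query to terms with the same meaning.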
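The synonym idea can be sketched in a few lines. This is a simplified illustration with a made-up synonym list and hypothetical function names; it does not use or reproduce the Azure API.

```python
# Hypothetical synonym map: each group lists terms treated as equivalent.
SYNONYM_GROUPS = [
    {"usa", "america"},
    {"film", "movie"},
]


def expand_query(query, groups=SYNONYM_GROUPS):
    """Return one set of acceptable terms per word of the query."""
    expanded = []
    for word in query.lower().split():
        terms = {word}
        for group in groups:
            if word in group:
                terms |= group  # accept every synonym of this word
        expanded.append(terms)
    return expanded


def matches(document, query):
    """True when every query word, or one of its synonyms, is in the document."""
    words = set(document.lower().split())
    return all(terms & words for terms in expand_query(query))
```

With this map, a query for "film" also finds a document that only says "movie", while an unrelated document is still rejected.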

Building on what was once the science-fiction premise of neural networks, the Open Neural Network Exchange (ONNX) is available today. It is commonly encountered as SQL prediction, through the T-SQL PREDICT function, which can score rows against a stored model inside the database.

Semantic coding of data queries implies that logic-based languages (Python, C, Haskell, Java, etc.) should rest on the mathematical principles of probability. In the early stages of our career, we discussed Bayesian inference and data modeling with imaginary-number probability. It was a shoddy attempt to 'imitate' science, yet much of it is becoming real today.
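As a reminder of what Bayesian inference means in practice, here is a minimal sketch of Bayes' rule applied to a search result. The scenario (a click as evidence that a page is relevant) and all the numbers are assumptions chosen for illustration.

```python
def posterior(prior, likelihood, false_alarm):
    """Bayes' rule: P(H|E) = P(E|H) * P(H) / P(E).

    prior       -- P(H), belief that the page is relevant before any evidence
    likelihood  -- P(E|H), chance of a click when the page is relevant
    false_alarm -- P(E|not H), chance of a click when it is not relevant
    """
    evidence = likelihood * prior + false_alarm * (1.0 - prior)
    return likelihood * prior / evidence


# Assumed numbers: 20% of pages are relevant, relevant pages are clicked
# 60% of the time, irrelevant ones 10% of the time.
updated = posterior(prior=0.2, likelihood=0.6, false_alarm=0.1)
```

Under these assumptions a single click raises the belief that the page is relevant from 0.2 to about 0.6, which is the kind of update a prediction module repeats with every new signal.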

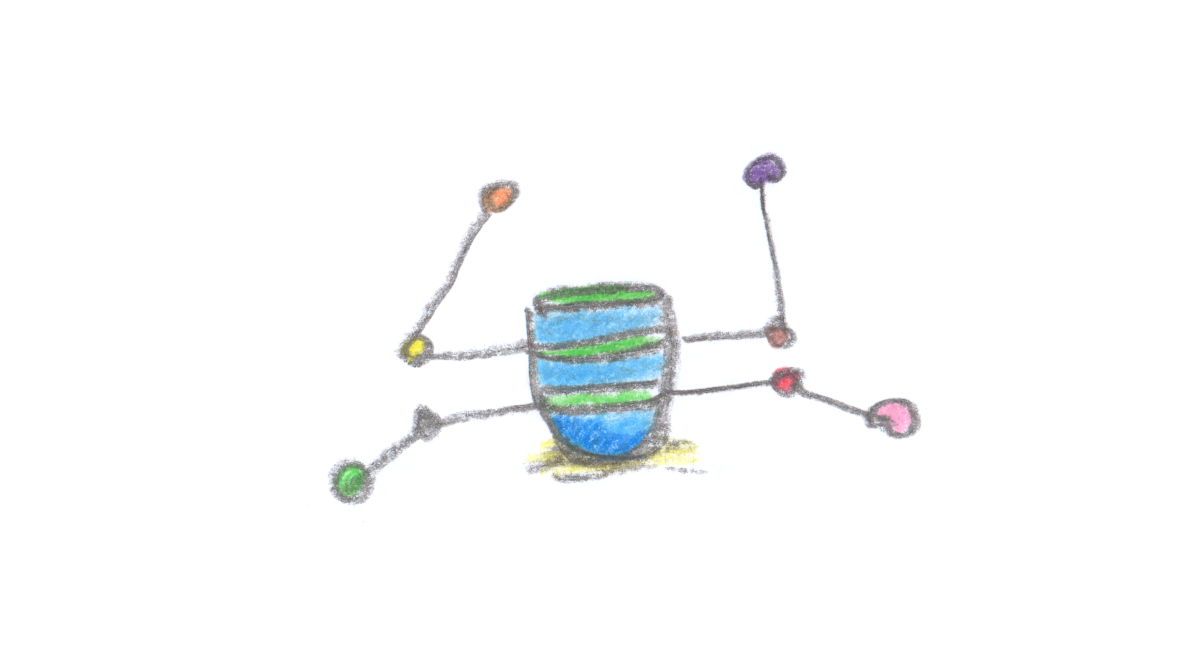

Search engines advance in prediction not only on the basis of product preference, but also through anticipatory skills they learn themselves. Such probability modules allow machine behaviour to be used for more than indexing. Narrow artificial intelligence (NAI) would suffice for basic queries and database management.

Exclusions and servers

From the early principles of Gaussian exclusions (which we know only briefly), we understand the binary operation of choice and probability, and hence network-based databases. Another example of radical database improvement is IBM Db2, the AI database for "data driven insights".

All of this brings us to cognitive computation, AI programming and sciences far more complicated than content creation alone. We can therefore be confident that content management and aggregation are becoming future-proof. Whether it is affordable is a different question, but SEO and content writing remain pivotal.

Creative writing and content aggregation... manually

The latest perks of AI are mostly enterprise features, and beginner bloggers are largely left to their own devices. Nevertheless, tools for spreading content are available. Aggregators worth mentioning:

- Feedly

- Mix

- Inoreader

- Instapaper

- Curator

- Digg, etc.

Aggregators are merely 'scrapers' of what you have created, offered in exchange for basic promotion, so the database side is at your own expense. Managing clean data, having server-side technical skills and being creative in writing, design and video production may outline your future plan. SEO tools and analysis are widely available, but quality content is something to strive for.
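To see what an aggregator actually 'scrapes', here is a minimal sketch that reads titles and links from an RSS 2.0 feed using only the Python standard library. The feed text and the `blog.example` addresses are invented for illustration; a real aggregator would fetch the feed over the network and handle many more fields.

```python
import xml.etree.ElementTree as ET

# Hypothetical feed, inlined so the example runs without a network call.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example blog</title>
    <item>
      <title>Site indexing</title>
      <link>https://blog.example/indexing</link>
    </item>
    <item>
      <title>Cognitive search</title>
      <link>https://blog.example/cognitive</link>
    </item>
  </channel>
</rss>"""


def read_items(feed_xml):
    """Return a (title, link) pair for every <item> in the feed."""
    root = ET.fromstring(feed_xml)
    return [
        (item.findtext("title", default=""), item.findtext("link", default=""))
        for item in root.iter("item")
    ]


for title, link in read_items(SAMPLE_FEED):
    print(f"{title}: {link}")
```

The aggregator takes only what the feed exposes, so keeping titles and links clean on your side is part of the data management mentioned above.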